AI can be perplexing, but it doesn't have to be. This guide breaks down the key terms and concepts, making the world of artificial intelligence easier to navigate. If you’ve ever felt lost in the jargon, this is for you!

What is AI, Anyway?

Artificial Intelligence (AI) is more than just a buzzword; it’s a branch of computer science aimed at creating systems that can perform tasks that normally require human intelligence, such as understanding language, recognizing images, learning from experience, and making decisions. Over the years, AI has evolved from a niche research area into a significant technological force that companies are eager to harness.

Today, you’ll find discussions about AI everywhere, from tech blogs to corporate boardrooms. However, the sheer volume of information can make it tricky to grasp what’s being said. Understanding the lingo is key to keeping up with the latest advancements and innovations in the field.

The Evolution of AI

As businesses invest heavily in AI technology, the terminology varies widely. With every new development, companies tend to put their own spin on what AI means, which makes it harder to understand how AI affects different sectors.

To help make sense of it all, let’s break down some common AI terms you’ll encounter.

Core Concepts of AI

Machine Learning

Machine learning is a fundamental aspect of AI that focuses on training systems to learn from data. This subset of AI involves algorithms that analyze historical data to predict outcomes or make decisions about new information.

For instance, when an AI model is trained using vast datasets, it can identify patterns and make informed predictions based on that training. This ability to learn from data is what makes machine learning so powerful and crucial for many AI technologies today.
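To make "learning from historical data" concrete, here is a minimal Python sketch that fits a straight line to a handful of past observations using ordinary least squares, then uses that line to predict a new value. The data and the function names `fit_line` and `predict` are hypothetical; real machine learning models use far more inputs and parameters, but the idea of deriving a rule from examples rather than writing it by hand is the same.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to historical data with ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by variance of x gives the best-fit slope
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new, unseen input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical historical data, invented for illustration
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [3.0, 5.0, 7.0, 9.0]

model = fit_line(history_x, history_y)
print(predict(model, 5.0))  # 11.0
```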

Artificial General Intelligence (AGI)

Artificial General Intelligence refers to AI that matches or even surpasses human intelligence across a broad range of tasks, rather than excelling at a single narrow task. AGI remains a topic of intense interest and debate within the tech community.

While AGI promises incredible capabilities, it also raises ethical and safety concerns. The prospect of machines achieving superhuman intelligence brings to mind various dystopian narratives from popular culture, which only adds to the conversation's complexity.

Generative AI

Generative AI is another exciting area that refers to technology capable of creating new content, such as text, images, and even code. This form of AI has gained attention for its ability to produce human-like outputs.

Popular tools like ChatGPT and Google’s Gemini exemplify the potential of generative AI. These systems use sophisticated algorithms to generate responses that can sometimes be indistinguishable from those produced by humans.

Hallucinations

Hallucinations in AI do not refer to strange visions but rather to situations when AI systems confidently produce incorrect or nonsensical outputs. This happens partly because generative models produce the most statistically plausible response to a prompt rather than checking facts, and partly because a model is only as good as the data it is trained on.

The presence of hallucinations highlights the importance of quality training data. Errors can lead to significant misunderstandings and showcase the limitations of AI models in understanding context or nuances.

Bias in AI

Bias in AI is a significant concern that stems from the data used to train these systems. If the training data contains prejudices, the AI may replicate those biases in its outputs. This issue is particularly evident in applications like facial recognition.

For example, research conducted by Joy Buolamwini and Timnit Gebru highlighted that certain facial recognition technologies had higher error rates when identifying the gender of darker-skinned women. Such findings underscore the ethical responsibilities that come with developing and deploying AI technologies.
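One common way to surface the kind of disparity described above is to report error rates separately for each group instead of a single overall accuracy figure. The sketch below assumes hypothetical evaluation records of the form (group, actual label, predicted label); the records and group names are invented for illustration and are not data from the study.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of wrong predictions for each group."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if actual != predicted:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical evaluation results, made up for illustration only
records = [
    ("group_a", "female", "female"),
    ("group_a", "male", "male"),
    ("group_a", "female", "female"),
    ("group_a", "male", "male"),
    ("group_b", "female", "male"),
    ("group_b", "male", "male"),
    ("group_b", "female", "female"),
    ("group_b", "female", "male"),
]

rates = error_rate_by_group(records)
print(rates)  # {'group_a': 0.0, 'group_b': 0.5}
```

An overall error rate for these records would be 25%, which hides the fact that every mistake falls on one group.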

Understanding AI Models

What’s an AI Model?

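An AI model is the trained component at the core of an AI system: a mathematical function whose internal settings have been learned from data so that it can perform a specific task, such as classifying an image or predicting the next word in a sentence, without a person spelling out the rules. Models are what turn the complex workings of AI into practical applications.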

Within this category, there are several types of models. Large Language Models (LLMs) like OpenAI’s GPT are designed to process and generate human-like text. Diffusion models, on the other hand, are widely used to generate images from text prompts; they start from random noise and refine it step by step into a picture.

Foundation models are another important type. These versatile models are trained on vast amounts of data, allowing them to be adapted to many different applications, for example through fine-tuning or prompting, instead of training a separate model from scratch for each task.

The Training Process

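Training is the process through which AI models learn to recognize patterns in data. The model makes predictions on examples from a large dataset, measures how far those predictions are from the correct answers, and adjusts its internal parameters to reduce the error, repeating this cycle many times.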

Training requires substantial computing power, and many companies rely on powerful GPUs to facilitate this process. The quality and volume of training data significantly influence how well the model performs.
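The cycle of predicting, measuring error, and adjusting can be sketched with gradient descent, the family of optimization methods most neural networks are trained with. This minimal sketch trains the two parameters of a straight line `y = w * x + b` on a few made-up data points; the learning rate and number of epochs are arbitrary choices that happen to work for this toy data, not recommended settings.

```python
def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters start at arbitrary values
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step each parameter against its gradient to reduce the error
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical training data that lies on the line y = 2x + 1
w, b = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each pass nudges `w` and `b` in the direction that lowers the error. Large models repeat the same kind of update across billions of parameters and enormous datasets, which is where the heavy demand for GPUs comes from.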

Parameters Explained

Parameters are the internal values within an AI model, such as the weights on the connections in a neural network, that are learned during training and shape its outputs. Together they determine how the model interprets input data and generates responses.

The number of parameters is a rough measure of a model's size and capacity. Models with more parameters can potentially perform better at tasks requiring nuanced understanding and generation of data, although the quality of the training data and the training process matter as well.
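For a simple fully connected network, the parameter count follows directly from the layer sizes: each layer has one weight per input-output connection plus one bias per output unit. The sketch below assumes a hypothetical network with 784 inputs (a 28×28 image), one hidden layer of 128 units, and 10 outputs; the function name is illustrative.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a stack of fully connected layers."""
    total = 0
    for inputs, outputs in zip(layer_sizes, layer_sizes[1:]):
        total += inputs * outputs  # one weight per input-output connection
        total += outputs           # one bias per output unit
    return total


print(count_dense_parameters([784, 128, 10]))  # 101770
```

Even this small network has about a hundred thousand parameters; large language models are described in billions.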

Additional Key Terms

Natural Language Processing (NLP)

Natural Language Processing is the field of AI concerned with enabling machines to understand and generate human language. It is essential for applications like ChatGPT, which can interpret text queries and generate relevant responses.

NLP capabilities are often enhanced by large datasets that help train models to understand the intricacies of human language, including idioms, slang, and contextual meanings.

Inference

Inference is the process of running a trained model on new input to produce an output. For example, when a user asks ChatGPT for a recipe, the model processes the request and generates a detailed answer.

This step is critical because it represents the practical application of all the training and learning that the model has undergone.
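A language model's inference loop can be illustrated with a toy bigram model: "training" just counts which word follows which in a tiny corpus, and inference repeatedly appends the most likely next word to the prompt. This is a deliberately simplified stand-in for an LLM, which predicts tokens with a neural network and usually samples rather than always taking the top choice; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """'Training': count which word follows which in the corpus."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model, prompt, max_words=5):
    """'Inference': repeatedly append the most frequent next word."""
    output = prompt.split()
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:
            break  # no known continuation for the last word
        # Ties are broken by the order words were first seen
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


corpus = "mix the flour and the sugar then bake the cake"
model = train_bigram(corpus)
print(generate(model, "mix the", max_words=2))  # mix the flour and
```

Training happens once; inference reuses the frozen counts every time a new prompt arrives, just as a deployed model reuses its trained parameters.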

Tokens

Tokens refer to chunks of text, which can be words, parts of words, or even individual characters. AI models break text into tokens to analyze relationships and generate meaningful responses.

The maximum number of tokens a model can take into account at once is called its context window. A larger context window lets the model consider longer documents or conversations when generating a response.
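Production tokenizers, such as those based on byte-pair encoding, learn their vocabulary from data, but the basic idea of splitting text into known pieces can be sketched with a greedy longest-match rule. The tiny vocabulary below is hypothetical, and any character it does not cover falls back to a single-character token.

```python
def tokenize(word, vocab):
    """Split a word into the longest vocabulary pieces, left to right.

    Falls back to single characters when no longer piece matches.
    """
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab or end - start == 1:
                tokens.append(piece)
                start = end
                break
    return tokens


vocab = {"un", "break", "able", "token", "s"}
print(tokenize("unbreakable", vocab))  # ['un', 'break', 'able']
print(tokenize("tokens", vocab))       # ['token', 's']
```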

Neural Networks

Neural networks are loosely inspired by the human brain: they process data through layers of interconnected nodes, each of which combines its inputs using weights learned from data. Because those weights are learned rather than hand-coded, neural networks can capture complex patterns without explicit programming.

They form the backbone of many AI applications, making them critical in various fields, from healthcare to finance.
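The sketch below wires up a two-layer network by hand to compute XOR (output 1 when exactly one input is 1), a pattern that no single weighted sum of the inputs can capture. Each hidden node computes a weighted sum of its inputs plus a bias and passes it through a ReLU activation (`max(0, x)`); the output node takes a weighted sum of the hidden nodes. The weights are chosen manually for illustration; in a real network they would be learned during training.

```python
def relu(x):
    """ReLU activation: pass positive values through, clip negatives to zero."""
    return max(0.0, x)


def xor_network(x1, x2):
    # Hidden layer: two nodes, each a weighted sum of the inputs plus a bias
    h1 = relu(1.0 * x1 + 1.0 * x2 + 0.0)
    h2 = relu(1.0 * x1 + 1.0 * x2 - 1.0)
    # Output node: weighted sum of the hidden nodes
    return 1.0 * h1 - 2.0 * h2


for a in (0, 1):
    for b in (0, 1):
        print(a, b, xor_network(a, b))
```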

Transformers

Transformers are a specific type of neural network architecture that excels in processing sequences of data. This architecture employs an “attention” mechanism to determine how different parts of a sequence relate to each other.

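Transformers were introduced by Google researchers in 2017 and have dramatically changed the landscape of generative AI since then. Because they process all positions in a sequence in parallel rather than one at a time, they train faster and scale to larger models than earlier sequence-processing networks.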
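The attention mechanism can be sketched as scaled dot-product attention, the form used in the original 2017 Transformer: each query vector is compared with every key vector, the scores are turned into weights with a softmax, and those weights mix the value vectors. This pure-Python sketch omits the learned projections that produce queries, keys, and values from token embeddings, as well as multiple attention heads; the example vectors are arbitrary.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query mixes the value vectors,
    weighted by how well the query matches each key."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Dot product of the query with each key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors, one dimension at a time
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]
# This query resembles the first key, so the output leans toward the first value
print(attention([[2.0, 0.0]], keys, values))
```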

RAG (Retrieval-Augmented Generation)

RAG enhances an AI's ability to generate accurate responses by incorporating external information into its processing. This approach allows models to check reliable sources when crafting answers, reducing the likelihood of hallucinations.

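For instance, when a chatbot built with RAG receives a question, it first searches an external knowledge source, such as a company’s documentation, for the most relevant passages and adds them to the model’s input. The model can then ground its answer in that material instead of relying only on what it learned during training.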
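Here is a minimal sketch of the retrieve-then-prompt step, assuming a small in-memory list of hypothetical documents. Production RAG systems usually rank passages by embedding similarity; simple word overlap is swapped in here to keep the example self-contained. The resulting prompt would then be sent to a language model, which is not shown.

```python
import re


def words(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question."""
    q = words(question)
    ranked = sorted(documents, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, documents):
    """Prepend the retrieved passages so the model can ground its answer."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base
docs = [
    "The H100 is a data center GPU made by Nvidia.",
    "Llama is a family of open models released by Meta.",
    "Hugging Face hosts AI models and datasets.",
]
print(build_prompt("Who released the Llama models?", docs))
```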

Hardware Behind AI

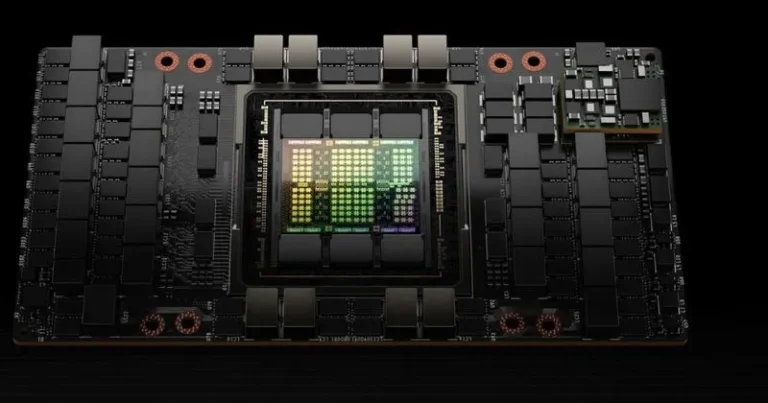

Nvidia’s H100 Chip

Nvidia’s H100 chip is one of the most sought-after graphics processing units (GPUs) for AI training. It’s recognized for its ability to handle demanding AI workloads, making it a favorite among tech companies.

The extraordinary demand for these chips has elevated Nvidia’s market position, although that could change as more companies enter the AI chip market.

Neural Processing Units (NPUs)

Neural Processing Units are specialized processors designed to perform AI inference directly on devices like smartphones and tablets. This functionality allows for more efficient AI-powered tasks, like enhancing video call quality with background blur.

Apple’s Neural Engine is a well-known example of an NPU that speeds up AI features on consumer devices.

TOPS (Trillion Operations Per Second)

TOPS is a performance metric used by tech vendors to describe how many operations their chips can execute per second when running AI inference tasks. It offers a rough way to compare hardware, but it is a theoretical peak: real-world performance also depends on software, memory bandwidth, and the numeric precision used.
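A peak TOPS figure is typically derived from the number of multiply-accumulate (MAC) units and the clock speed, with each MAC commonly counted as two operations (a multiply and an add). The sketch below assumes a hypothetical NPU with 4,096 MAC units running at 1.5 GHz; actual vendor calculations, and the precision they quote (often low-precision integer math such as INT8), vary.

```python
def peak_tops(mac_units, clock_hz, ops_per_mac=2):
    """Theoretical peak: every MAC unit busy on every clock cycle.

    A multiply-accumulate is commonly counted as 2 operations.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


# Hypothetical NPU: 4,096 MAC units at 1.5 GHz
print(peak_tops(4096, 1.5e9))  # 12.288
```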

Key Players in the AI Landscape

As AI continues to evolve, several companies are at the forefront of its development. These players range from entrenched tech giants to innovative startups.

OpenAI has arguably brought AI into the mainstream with its release of ChatGPT, which captured significant public interest. Microsoft is integrating AI features into its products through Copilot, capitalizing on its investment in OpenAI.

Google is rapidly advancing its AI capabilities with Gemini, aiming to integrate AI across its services. Meta focuses on open-source AI models with its Llama initiative, fostering collaboration within the tech community.

Apple is also making strides, adding AI-driven features to its products under the Apple Intelligence banner. Anthropic, founded by former OpenAI employees, is working on developing safe AI systems and has attracted considerable investment.

Other notable mentions include Elon Musk’s xAI, which aims to innovate within the AI space, and Perplexity, known for its AI-powered search engine. Finally, Hugging Face serves as a platform for AI models and datasets, promoting accessibility and collaboration.

Final Thoughts

The world of AI moves at lightning speed, but don’t let that intimidate you. Keep this cheatsheet handy as your trusty AI decoder ring. Whether you’re chatting with ChatGPT, creating art with DALL-E, or diving deep with Claude, you’ve now got the lingo down pat.

Remember, these AI models are powerful tools, but they’re also surprisingly fun to explore. So go ahead – experiment, learn, and maybe even teach your AI assistant a joke or two. Just don’t forget to keep this guide bookmarked for when you need a quick refresh on your robo-vocabulary!