The development of algorithms and models that can automatically learn from data, make predictions, and improve their performance over time is the main goal of machine learning – a subfield of artificial intelligence.

It is a critical part of data science because it lets us build predictive models that solve challenging problems and support intelligent decisions. It is often the method chosen after statistical analysis has been applied to preprocessed data, so that the extracted insights can be put to work in real-time projects.

Generally speaking, there are three types of machine learning algorithms: supervised learning, unsupervised learning, and reinforcement learning.

In supervised learning, the algorithm is trained on a labelled dataset with known inputs and outputs with the aim of discovering an input-output mapping.

Unsupervised learning aims to find patterns and relationships in the data by training the algorithm on an unlabeled dataset where the inputs are known but the outputs are not.

In reinforcement learning, the algorithm learns by interacting with its environment and receiving feedback in the form of rewards or penalties.
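To make the first two categories concrete, here is a minimal sketch on the built-in iris data. The choice of lm() for the supervised case and kmeans() with three clusters for the unsupervised case is an assumption made for illustration. Reinforcement learning is left out because it needs an interactive environment.

```r
# Supervised: the target column (Petal.Width) is known and used during training
sup_fit <- lm(Petal.Width ~ Petal.Length, data = iris)
coef(sup_fit)  # learned input-output mapping (intercept and slope)

# Unsupervised: the Species labels are withheld; only the measurements are used
set.seed(42)  # kmeans starts from random centers
unsup_fit <- kmeans(iris[, 1:4], centers = 3, nstart = 10)

# Compare discovered clusters with the true labels (used only for inspection)
table(cluster = unsup_fit$cluster, species = iris$Species)
```

The labels appear only in the final table, which is what separates the two settings: the regression needs the outcome to fit at all, while the clustering never sees it.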

In data science, machine learning is frequently used in a vast array of applications, including recommendation systems, natural language processing, image recognition, and predictive modelling.

The field also enables us to make predictions and judgments based on data as well as automate difficult tasks that would otherwise need human understanding and involvement.

Machine learning packages and libraries are widely available in R, including caret, randomForest, and keras. Using a variety of algorithms and approaches, including support vector machines, neural networks, decision trees, and random forests, these packages let us create and train machine learning models.

In summary, machine learning is a valuable data science tool that helps us to develop predictive models and find solutions to critical problems.

R is a popular option among data scientists and analysts for developing and training machine learning models because of its extensive collection of machine learning tools and libraries.

Overview of Popular Machine Learning Algorithms in R

R is a popular tool for putting machine learning algorithms into practice. Here, I will provide an overview of some of the common machine learning algorithms in R.

- Linear Regression: A supervised learning approach, this is used to forecast a continuous output variable based on one or more input variables. The lm() function in R can be used to perform linear regression.

- Logistic Regression: Also a supervised learning approach, this is used to predict a binary output variable based on one or more input variables. The glm() function in R can be used to perform logistic regression.

- Decision Trees: Another supervised learning technique, decision trees are used for both classification and regression applications. The rpart() function in R can be used to create decision trees.

- Random Forest: Random Forest is an ensemble learning technique that combines multiple decision trees to increase the model’s robustness and accuracy. The randomForest() function in R can be used to create these models.

- Support Vector Machines (SVM): SVM is a supervised learning algorithm that can be used for both regression and classification applications. The ‘e1071’ package in R can be used to perform SVM.

- Neural Networks: Inspired by the structure and operation of the human brain, neural networks are most often used as supervised learning systems. The ‘keras’ package in R can be used to develop neural networks.

- K-Nearest Neighbors (KNN): KNN is a supervised learning algorithm used for both classification and regression applications. The ‘class’ package can be used to perform KNN classification in R.

- Principal Component Analysis (PCA): This is a kind of unsupervised learning algorithm that is used to carry out dimensionality reduction and feature extraction. The prcomp() function in R can be used to perform PCA.
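Several of the functions in the list above can be tried in a few lines. The sketch below covers lm(), glm(), rpart(), and prcomp(). The built-in mtcars and iris datasets and the chosen predictors are assumptions made for illustration, not recommendations.

```r
# Assumption: built-in datasets (mtcars, iris) stand in for real project data
library(rpart)  # recommended package shipped with standard R installations

# Linear regression: predict fuel economy (continuous) from weight and horsepower
lm_fit <- lm(mpg ~ wt + hp, data = mtcars)

# Logistic regression: predict transmission type (binary 0/1) from weight
glm_fit <- glm(am ~ wt, data = mtcars, family = binomial)
am_prob <- predict(glm_fit, type = "response")  # fitted probabilities

# Decision tree: classify iris species from the four measurements
tree_fit <- rpart(Species ~ ., data = iris, method = "class")
tree_pred <- predict(tree_fit, iris, type = "class")

# PCA: project the four numeric iris columns onto principal components
pca_fit <- prcomp(iris[, 1:4], center = TRUE, scale. = TRUE)

coef(lm_fit)
mean(tree_pred == iris$Species)  # training accuracy, optimistic by construction
summary(pca_fit)                 # variance explained per component
```

All four calls share the same pattern of a formula or data matrix in and a fitted object out, which is why wrappers such as caret can expose them through one interface.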

The best-known machine learning methods in R don’t stop there. Depending on the particular objective and dataset, R offers a wide range of additional algorithms and variations.

Building Machine Learning Models with the 'caret' Package

A well-known resource for building machine learning models is the R package ‘caret’, which stands for ‘Classification and REgression Training’. It makes it simple to train and assess models and offers a unified interface for more than 200 different machine learning algorithms.

The caret package’s basic building blocks for creating a machine learning model are as follows:

- Data Preparation: Before building a model, we must clean, process, and divide our data into training and testing sets.

- Choose a Model: Once our data is processed, we can use the caret package’s train() function to select a model (through its method argument) and train it using our training data. The formula passed to train() specifies the outcome variable and the predictors.

- Tune the Model: Hyperparameters are values set before training that affect the model’s performance. In caret, tuning happens inside train(): the tuneGrid or tuneLength argument defines the candidate values, and trainControl() defines the resampling scheme used to compare them.

- Evaluate the Model: After our model has been trained and tuned, we can use the predict() function to generate predictions on our test data and assess the model’s performance using metrics like accuracy, precision, and recall.

Here is an illustration of how to construct a machine learning model using the caret package:

```r
library(caret)

# Load the iris dataset
data(iris)

# Split the data into training and testing sets (80/20, stratified by species)
set.seed(123)
trainIndex <- createDataPartition(iris$Species, p = .8, list = FALSE)
trainData <- iris[trainIndex, ]
testData <- iris[-trainIndex, ]

# Train a random forest and tune mtry with 5-fold cross-validation.
# caret tunes inside train(); ntree is passed through to randomForest().
ctrl <- trainControl(method = "cv", number = 5)
rf_model <- train(Species ~ ., data = trainData, method = "rf",
                  trControl = ctrl, tuneGrid = expand.grid(mtry = 2:4),
                  ntree = 500)

# Make predictions on the testing data
predictions <- predict(rf_model, newdata = testData)

# Evaluate the model's performance
confusionMatrix(predictions, testData$Species)
```

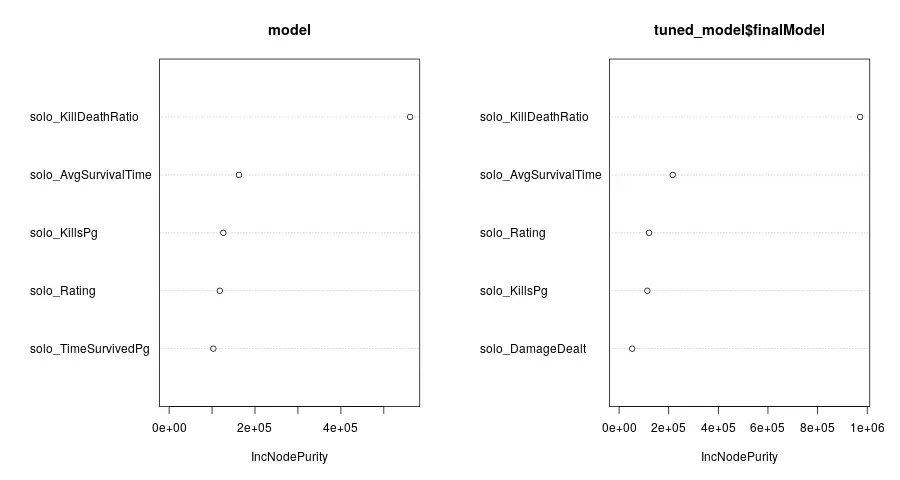

In this illustrative example, the iris dataset was divided into training and testing sets. A random forest model was then trained using the train() function, which is also where caret tunes hyperparameters such as mtry by resampling.

Predictions were then made using the predict() function on the testing data, and the model’s performance was evaluated using the confusionMatrix() function.
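For readers who want to see what sits behind such a report, accuracy, precision, and recall can be computed by hand from a confusion table in base R. The labels below are hypothetical values made up for illustration, not output from the model above.

```r
# Hypothetical true labels and predictions for a binary problem
actual    <- factor(c("yes", "yes", "no", "no", "yes", "no", "yes", "no"),
                    levels = c("no", "yes"))
predicted <- factor(c("yes", "no", "yes", "no", "yes", "yes", "yes", "no"),
                    levels = c("no", "yes"))

# Rows are predictions, columns are the truth
cm <- table(predicted = predicted, actual = actual)

tp <- cm["yes", "yes"]  # predicted yes, actually yes
fp <- cm["yes", "no"]   # predicted yes, actually no
fn <- cm["no", "yes"]   # predicted no, actually yes

accuracy  <- sum(diag(cm)) / sum(cm)  # share of all predictions that are correct
precision <- tp / (tp + fp)           # share of predicted "yes" that are correct
recall    <- tp / (tp + fn)           # share of actual "yes" that were found
```

With these eight observations the table holds 3 true positives, 2 false positives, and 1 false negative, so accuracy is 0.625, precision is 0.6, and recall is 0.75.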

In essence, the caret package offers a powerful and adaptable framework for developing machine learning models in R.

After a model has been successfully built, it can be made accessible to end users by deploying it through web apps and dashboards, developed with popular packages like ‘shiny’.
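As a rough idea of what such a deployment can look like, the sketch below wraps a model in a small shiny app. The lm() model on mtcars, the input name wt, and the layout are all assumptions made for illustration. A real app would more likely load a saved model from disk.

```r
library(shiny)

# Assumption: a simple lm() on the built-in mtcars data stands in for the deployed model
model <- lm(mpg ~ wt, data = mtcars)

# User interface: one numeric input and one text output
ui <- fluidPage(
  titlePanel("Fuel economy predictor"),
  numericInput("wt", "Weight (1000 lbs)", value = 3, min = 1, max = 6, step = 0.1),
  textOutput("prediction")
)

# Server logic: recompute the prediction whenever the input changes
server <- function(input, output, session) {
  output$prediction <- renderText({
    pred <- predict(model, newdata = data.frame(wt = input$wt))
    paste("Predicted mpg:", round(pred, 1))
  })
}

app <- shinyApp(ui = ui, server = server)
# Launch interactively with: runApp(app)
```

The model is fitted once when the script loads, and only the prediction runs inside the reactive renderText() block, which keeps the app responsive.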

Alternative Approaches for Building ML Models

While the caret package is a known resource for building machine learning models in R, there are more packages and frameworks that can be employed instead. We will review some different approaches for developing ML models in R in this post, including TensorFlow, Keras, H2O, Tidymodels, XGBoost, and more.

Two open-source machine learning frameworks that have grown in popularity recently are TensorFlow and Keras. TensorFlow, a robust tool for building and refining machine learning models, was designed by Google.

It offers a low-level API for creating models from scratch as well as a high-level API called Keras that facilitates the rapid and effective construction of models.

Built on top of TensorFlow is the well-known neural network library Keras. With just a few lines of code, developers can build complex architectures using its straightforward, user-friendly interface for deep learning models.

Additionally, Keras has supported a variety of backends: older releases ran on TensorFlow, Theano, and CNTK, while Keras 3 supports TensorFlow, JAX, and PyTorch.

H2O is a popular machine learning package that aims to be quick, scalable, and simple to use. It encompasses a range of supervised and unsupervised learning algorithms, such as deep learning, random forests, and gradient boosting.

H2O acts as a complete platform for creating and deploying machine learning models because it includes many tools for data preprocessing, model tuning, and visualization.

The Tidymodels ecosystem is another alternative for developing ML models in R. Tidymodels is a collection of R packages designed to streamline the entire modelling process, from data preprocessing through model tuning and evaluation.

It is a comprehensive and versatile framework for developing models as it includes packages for data wrangling (such as dplyr), feature engineering (such as recipes), and model training (such as parsnip).
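A minimal sketch of how these pieces fit together is shown below. The iris data, the normalization step, and the decision tree with the rpart engine are assumptions chosen to keep the example small, not part of any recommended setup.

```r
library(tidymodels)  # attaches rsample, recipes, parsnip, workflows, yardstick

# Stratified 80/20 split
set.seed(123)
split <- initial_split(iris, prop = 0.8, strata = Species)
train_data <- training(split)
test_data  <- testing(split)

# Feature engineering with recipes: normalize all numeric predictors
rec <- recipe(Species ~ ., data = train_data) |>
  step_normalize(all_numeric_predictors())

# Model specification with parsnip: a classification tree fitted by rpart
spec <- decision_tree(mode = "classification") |>
  set_engine("rpart")

# Bundle preprocessing and model, then fit on the training set
wf_fit <- workflow() |>
  add_recipe(rec) |>
  add_model(spec) |>
  fit(data = train_data)

# Predict on the held-out set and compute accuracy with yardstick
preds <- predict(wf_fit, new_data = test_data) |>
  bind_cols(test_data)
accuracy(preds, truth = Species, estimate = .pred_class)
```

Because the recipe is part of the workflow, the normalization learned on the training set is applied automatically to new data at prediction time.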

Another tool for creating machine learning models in R is the ‘ranger’ package. Ranger is a fast implementation of random forests, a technique discussed earlier in this article.

It works well for both classification and regression applications. Ranger is made to be scalable and speedy, and it can handle large datasets with millions of observations and thousands of variables.
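A short sketch of a ranger classification forest follows. The iris data, the simple random 80/20 split, and the num.trees and importance settings are assumptions made for illustration.

```r
library(ranger)

# Simple random 80/20 split of the built-in iris data
set.seed(123)
train_idx <- sample(nrow(iris), size = 0.8 * nrow(iris))
train_data <- iris[train_idx, ]
test_data  <- iris[-train_idx, ]

# Classification forest; num.trees and importance are illustrative settings
rf_fit <- ranger(Species ~ ., data = train_data,
                 num.trees = 500, importance = "impurity", seed = 123)

# predict() returns an object; the class labels live in $predictions
rf_pred <- predict(rf_fit, data = test_data)$predictions

mean(rf_pred == test_data$Species)  # test-set accuracy
rf_fit$variable.importance          # impurity-based importance per predictor
```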

Yet another approach for building ML models in R is XGBoost. Gradient boosting is an effective ensemble technique that can improve the accuracy of predictive models, and XGBoost is an implementation of this method.

Machine learning contests on Kaggle have been won using XGBoost, which is intended to be fast, scalable, and accurate.
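The sketch below fits a small XGBoost classifier through the package's xgb.DMatrix() and xgb.train() functions. The mtcars data, the three chosen predictors, and the untuned settings are assumptions made for illustration. The data is far too small to show the speed or accuracy XGBoost is known for.

```r
library(xgboost)

# xgboost expects a numeric feature matrix and a numeric label vector
x <- as.matrix(mtcars[, c("wt", "hp", "disp")])
y <- mtcars$am  # 0 = automatic, 1 = manual

dtrain <- xgb.DMatrix(data = x, label = y)

# Illustrative, untuned settings
params <- list(objective = "binary:logistic", max_depth = 2)
xgb_fit <- xgb.train(params = params, data = dtrain, nrounds = 20)

# For binary:logistic, predict() returns the probability of class 1
prob <- predict(xgb_fit, newdata = xgb.DMatrix(x))
mean((prob > 0.5) == y)  # training accuracy, optimistic by construction
```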

There are a variety of alternative tools and libraries available in addition to these packages and frameworks. Multiple models can be combined into an ensemble using the ‘caretEnsemble’ package, which can enhance performance and lessen overfitting.

The MXNet deep learning library, which is commonly used in industry for its scalability and efficiency, is accessible through an R interface provided by the mxnet package.

Leveraging cloud-based platforms like Amazon SageMaker or Google Cloud AI Platform for constructing predictive models in R is an additional alternative. These platforms include a variety of pre-built algorithms, data processing tools, and automatic model tuning, as well as other tools and services for developing and deploying ML models.

Last but not least, it is important to note that some data scientists favor programming languages other than R for this purpose. Scikit-learn, TensorFlow, Keras, and PyTorch are just a few of the machine learning libraries and frameworks available in Python, a popular alternative to R.

Julia, Java, C++, and other programming languages are also frequently used for machine learning.

Each tool or framework has benefits and drawbacks, and the right choice depends on several factors. It is crucial to weigh performance, scalability, ease of use, and the particular requirements of your project.

These choices will also be affected by the nature of your data and the complexity of your model.

By exploring these alternative approaches, data scientists can choose the appropriate tool for their purposes and create models that are accurate, efficient, and scalable.
